Tournament Statistics

I’m pretty sure you all like numbers a lot. Even more so if they’re used to count stuff:

We printed and scanned about 1000 Scoring Guidelines (SGs), almost as many A4 pages with results and previews, and another 900 pages of the report on the problems for 2013. There were 18 projectors in use in the fight rooms, another two in our IT department, and about five spares. The fight assistants double-checked every grade, and we then checked again whether the numbers on the SGs matched those entered into newtoon. All in all, about 7500 grades were checked. The combination of the German IT team (who set up their own network and server and cloned a special Gentoo installation onto 20 laptops), the Chinese volunteers, who did a great job as fight administrators, and our new software worked almost flawlessly.

Well, and then there are the actual tournament statistics, starting with the results.

This graphic shows which problems were presented and which were rejected:

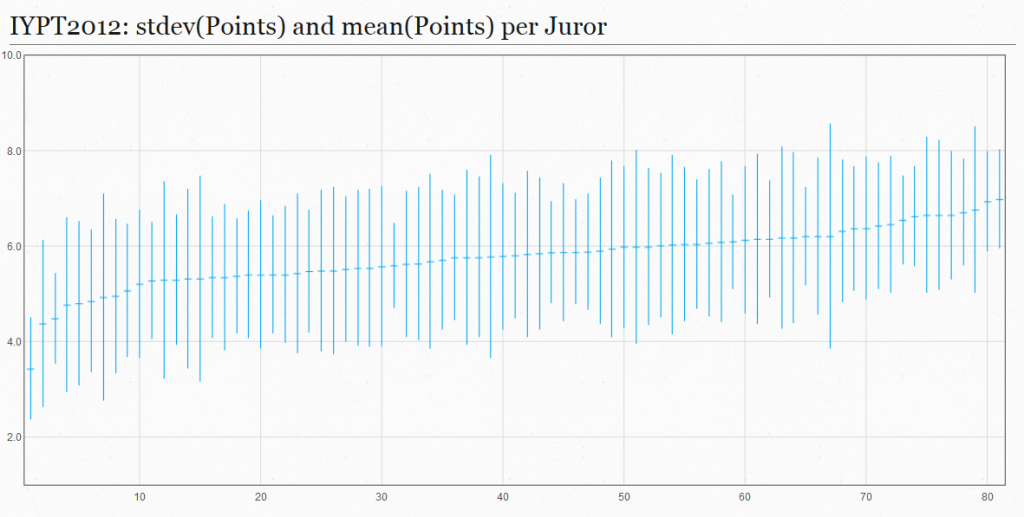

And I’m sure you want to know what the grades looked like. Here are the jurors’ means and standard deviations:

Also, in case you wonder whether the jurors were less homogeneous in their grading this year: here are the mean of the standard deviations and the mean of the min-max differences per performance (std. dev. / min-max) for the last three tournaments:

IYPT2010: 0.8058 / 2.3137

IYPT2011: 0.9094 / 2.5206

IYPT2012: 0.8739 / 2.4333

So no, the grades weren’t particularly inconsistent; they were even a bit more consistent than last year.
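If you’d like to compute the same two spread measures for your own tournament, here’s a minimal Python sketch. It assumes the grades are available as one list per performance (one grade per juror), uses the population standard deviation (`statistics.pstdev`), and runs on made-up grades. It is not necessarily how newtoon or our own analysis computed the numbers above.

```python
from statistics import mean, pstdev


def grading_spread(grades_per_performance):
    """Return (mean of std. devs, mean of max-min differences) over all performances.

    grades_per_performance: list of lists; each inner list holds the grades
    all jurors gave to one performance (hypothetical input format).
    """
    # Spread of the jurors' grades within each single performance
    # (population standard deviation is an assumption here)
    stdevs = [pstdev(grades) for grades in grades_per_performance]
    ranges = [max(grades) - min(grades) for grades in grades_per_performance]
    # Average the per-performance spreads over the whole tournament
    return mean(stdevs), mean(ranges)


# Made-up example grades (not real IYPT data): two performances, five jurors each
example_grades = [
    [6, 7, 7, 8, 6],
    [5, 5, 6, 4, 5],
]

s, r = grading_spread(example_grades)
print(f"{s:.4f} / {r:.4f}")  # prints: 0.6904 / 2.0000
```

For each performance, the standard deviation and the max-min difference measure how much the jurors disagreed; averaging them over all performances gives one pair of numbers per tournament, like the three pairs listed above.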

When Martin Plesch talked to the Jury, he was hoping for an overall mean of 5.5 (the center of our scale) and a reasonable standard deviation of somewhere around 1.5. Here are the numbers for IYPT2012:

Mean of Juror means: 5.82

Mean of Juror std. devs: 1.54

So our Jurors did a pretty good job, I’d say!
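These two juror numbers are computed the other way round: first each juror’s own mean and standard deviation over all the grades they gave, then the average of those over all jurors. Here’s a minimal Python sketch, again assuming a made-up input format (a dict from a hypothetical juror name to that juror’s grades) and the population standard deviation; it isn’t necessarily the exact procedure behind the IYPT2012 figures.

```python
from statistics import mean, pstdev


def juror_summary(grades_by_juror):
    """Return (mean of juror means, mean of juror std. devs).

    grades_by_juror: dict mapping a juror name to the list of all grades
    that juror gave during the tournament (hypothetical input format).
    """
    # Each juror's own average grade and spread across all performances
    juror_means = [mean(grades) for grades in grades_by_juror.values()]
    juror_stdevs = [pstdev(grades) for grades in grades_by_juror.values()]
    # Average over all jurors
    return mean(juror_means), mean(juror_stdevs)


# Made-up grades for two hypothetical jurors (not real IYPT data)
example_jurors = {
    "juror_a": [4, 6, 8],
    "juror_b": [5, 6, 7],
}

m, s = juror_summary(example_jurors)
print(f"Mean of Juror means: {m:.2f} / mean of Juror std. devs: {s:.2f}")
# prints: Mean of Juror means: 6.00 / mean of Juror std. devs: 1.22
```

Comparing the two results with the targets of about 5.5 and 1.5 is then a quick way to see whether the jury used the scale the way it was intended.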

July 24, 2012, 14:15

